You've seen the demo. The AI analyzes documents flawlessly, answers questions with startling accuracy, and generates insights that would take your team hours to produce manually. The technology clearly works. Leadership is convinced. The budget gets approved.

Then your team feeds the AI your actual business data, and everything falls apart.

This scenario plays out in boardrooms every week. AI models trained on pristine datasets stumble when they encounter the messy reality of enterprise information: incomplete records, inconsistent formats, data that changes faster than anyone expected, and edge cases that nobody thought to test.

The gap between AI demonstration and AI production isn't about the intelligence of the models. It's about the infrastructure that feeds, manages, and optimizes those models for real-world business conditions.

Why Clean Demos Don't Predict Dirty Data Performance

AI vendors typically demonstrate their technology using carefully curated datasets. Every field is populated. Formats are consistent. Quality is high. The AI performs beautifully because it's operating under laboratory conditions that rarely exist in actual businesses.

Your enterprise data tells a different story. Customer records have missing phone numbers. Product descriptions vary depending on who entered them. Data comes from systems built in different decades with different assumptions about how information should be structured. Some data updates in real-time, other information gets batch-processed overnight, and critical context lives in emails and documents that aren't connected to your main databases.

This isn't a criticism of your data management. It's the reality of business information that accumulates over time through organic growth, system changes, and the practical compromises that keep operations running.

But AI systems trained on perfect data often can't handle these imperfections gracefully. They expect specific formats, complete information, and consistent quality. When reality doesn't match expectations, AI performance degrades in ways that are difficult to predict and expensive to fix.

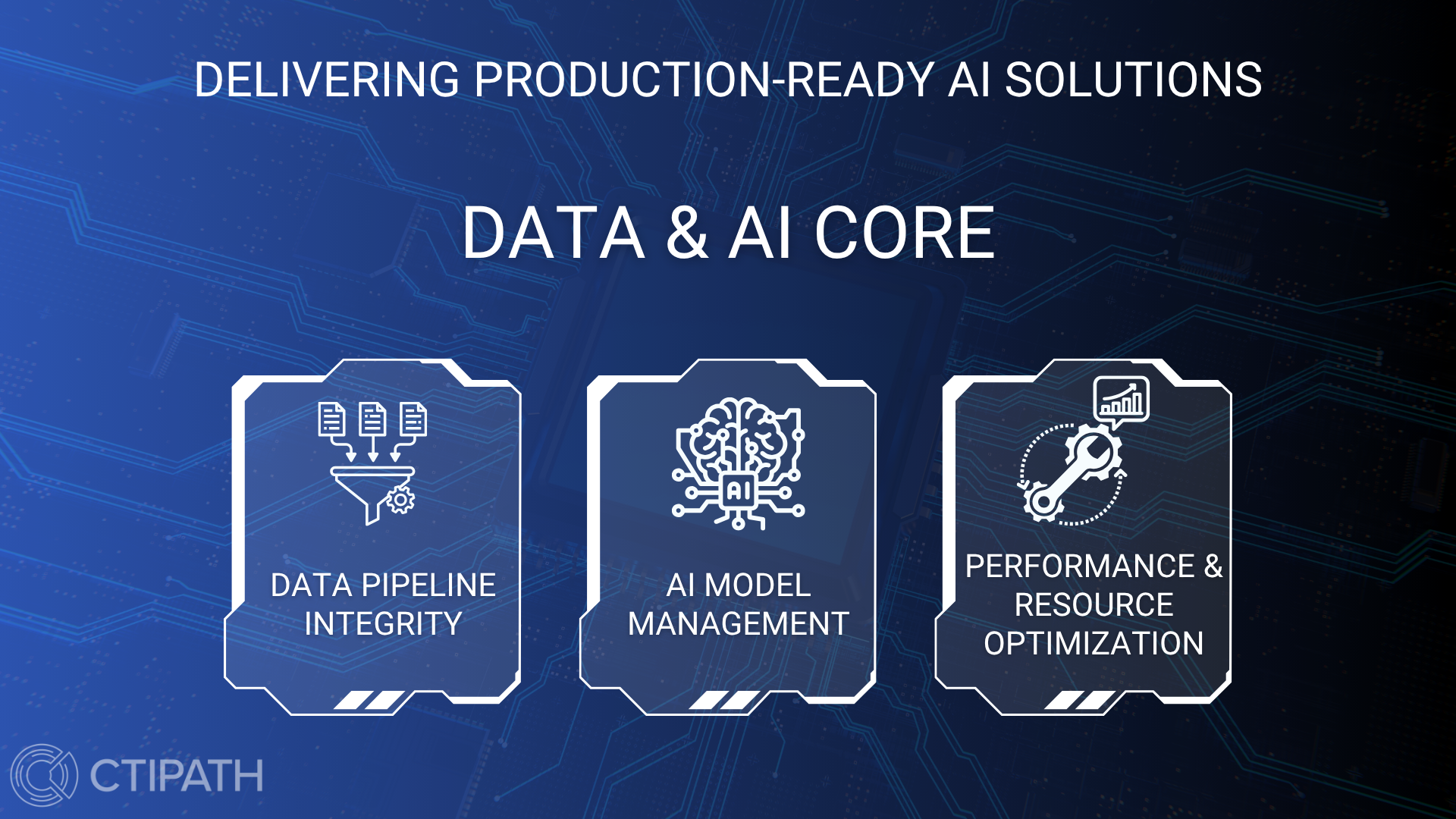

This gap is why CtiPath developed the Production-Ready AI Solutions Framework. The second part of that framework, Data & AI Core, focuses on closing it.

The Three Elements That Bridge the Demo-to-Production Gap

Successfully deploying AI with real business data requires systematic attention to three interconnected elements that most organizations either underestimate or handle as afterthoughts.

Element 5: Data Pipeline Integrity – Making Messy Data Work

Data pipeline integrity means building systems that transform your actual business data into the consistent, high-quality information that AI systems need to perform reliably.

This goes far beyond basic data cleaning. Effective data pipelines anticipate the variability in your information and handle it systematically:

Real-time quality monitoring catches problems as they happen, rather than days later when AI performance mysteriously degrades. Without it, your customer service AI starts giving poor responses, and only an investigation reveals that a data source began sending information in a new format three days earlier.

Intelligent validation goes beyond checking whether fields are populated to ask whether the information makes business sense. It flags a customer record with a birth date in the future, or a purchase amount that is clearly a data entry error with extra zeros.

Graceful degradation enables AI systems to continue operating when data quality issues occur rather than failing completely. If product descriptions are missing from some items, the system provides recommendations based on available information rather than returning no results.

Context preservation maintains the business meaning of information as it moves through processing steps. Financial data needs to preserve transaction dates, customer interactions must maintain context about communication channels, and operational data requires timestamps that enable accurate analysis.

The key insight: data pipeline integrity isn't about achieving perfect data quality. It's about building systems that deliver reliable AI performance despite the inevitable imperfections in real business information.
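The practices above can be combined in a single pipeline stage. The sketch below is a minimal illustration under stated assumptions: the customer order feed, its field names, the `SCHEMA` table, the $10,000 order ceiling, and the 5% alert threshold are all hypothetical. It checks structure first (catching upstream format changes), then business sense (future birth dates, amounts with extra zeros), quarantines failing records for review instead of failing the whole batch, and raises an alert when the rejection rate spikes.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical schema for a customer order feed: field name -> (expected type, required?)
SCHEMA = {
    "order_id": (str, True),
    "amount": (float, True),
    "birth_date": (date, False),
    "description": (str, False),
}
MAX_AMOUNT = 10_000.0      # hypothetical ceiling for a single order
DRIFT_ALERT_RATIO = 0.05   # hypothetical: alert if more than 5% of a batch is rejected


@dataclass
class BatchResult:
    accepted: list = field(default_factory=list)
    quarantined: list = field(default_factory=list)  # (record, reasons) pairs for review

    @property
    def reject_ratio(self) -> float:
        total = len(self.accepted) + len(self.quarantined)
        return len(self.quarantined) / total if total else 0.0


def find_problems(record: dict, today: date) -> list:
    """Return reasons a record is unusable; an empty list means it passed."""
    reasons = []
    # 1. Structural checks: catch upstream format changes as they arrive.
    for name, (expected, required) in SCHEMA.items():
        value = record.get(name)
        if value is None:
            if required:
                reasons.append(f"missing {name}")
        elif not isinstance(value, expected):
            reasons.append(f"{name} is {type(value).__name__}, expected {expected.__name__}")
    if reasons:
        return reasons  # business rules assume correct types, so stop here
    # 2. Business-sense checks: well-formed values that cannot be right.
    birth = record.get("birth_date")
    if birth is not None and birth > today:
        reasons.append("birth_date is in the future")
    if not 0 < record["amount"] <= MAX_AMOUNT:
        reasons.append(f"amount {record['amount']} outside (0, {MAX_AMOUNT:,.0f}]")
    return reasons


def process_batch(records: list, today: date) -> BatchResult:
    """Quarantine bad records instead of failing the whole batch."""
    result = BatchResult()
    for record in records:
        reasons = find_problems(record, today)
        if reasons:
            result.quarantined.append((record, reasons))
        else:
            # Optional fields may be absent; keep them as None and let
            # downstream code work with the information that is present.
            result.accepted.append({name: record.get(name) for name in SCHEMA})
    return result


batch = [
    {"order_id": "A1", "amount": 49.99, "description": "blue kettle"},
    {"order_id": "A2", "amount": 52.0},                   # no description: still usable
    {"order_id": "A3", "amount": 50000.0},                # likely extra zeros
    {"order_id": 4, "amount": "12.50"},                   # upstream format change
    {"order_id": "A5", "amount": 20.0, "birth_date": date(2031, 3, 14)},
]
result = process_batch(batch, today=date(2024, 6, 1))
print(len(result.accepted), "accepted,", len(result.quarantined), "quarantined")
if result.reject_ratio > DRIFT_ALERT_RATIO:
    print(f"ALERT: {result.reject_ratio:.0%} of the batch was rejected")
for record, reasons in result.quarantined:
    print(record["order_id"], reasons)
```

Records missing optional fields, such as `A2` without a description, still flow through. In a real deployment the alert would feed whatever monitoring your team already uses, and quarantined records would be stored for investigation rather than printed.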

Element 6: AI Model Management – Beyond Training and Deployment

Model management addresses the complete lifecycle of AI systems operating in business environments where data patterns change, requirements evolve, and performance expectations increase over time.

Performance monitoring that matters tracks business outcomes, not just technical metrics. Your fraud detection AI might maintain 95% accuracy while gradually becoming less effective at catching the types of fraud that actually cost money. Technical performance stays stable while business value erodes.
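The gap between technical and business metrics shows up with simple arithmetic. This sketch compares plain accuracy with the share of fraud dollars the model actually catches, using a small invented set of 20 transactions; the data are illustrative, not drawn from any real system.

```python
def accuracy(labels, predictions):
    """Fraction of transactions classified correctly (fraud or legitimate)."""
    return sum(y == p for y, p in zip(labels, predictions)) / len(labels)


def fraud_dollars_caught(amounts, labels, predictions):
    """Share of fraudulent dollar value that the model actually flagged."""
    fraud_total = sum(a for a, y in zip(amounts, labels) if y)
    caught = sum(a for a, y, p in zip(amounts, labels, predictions) if y and p)
    return caught / fraud_total if fraud_total else 1.0


# Illustrative data: 20 transactions, 2 of them fraudulent.
# The model flags the small $40 fraud but misses the $9,000 one.
amounts = [50.0] * 18 + [40.0, 9000.0]
labels = [False] * 18 + [True, True]
predictions = [False] * 18 + [True, False]

print(f"accuracy: {accuracy(labels, predictions):.0%}")  # accuracy: 95%
print(f"fraud dollars caught: {fraud_dollars_caught(amounts, labels, predictions):.1%}")  # fraud dollars caught: 0.4%
```

Both numbers come from the same predictions: accuracy rewards the 18 easy legitimate transactions, while the dollar-weighted view shows that the expensive fraud slipped through. Tracking a business-weighted metric alongside accuracy is one way to surface that kind of erosion.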

Bias detection across real populations becomes critical when AI systems serve diverse customer bases or make decisions that affect different groups. An AI that works well in testing might produce disparate outcomes when deployed across your actual customer population.
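A common first screen is to compare outcome rates across groups. The sketch below computes each group's approval rate and the ratio of the lowest rate to the highest; the 0.8 cutoff echoes the "four-fifths" rule of thumb from US employment-selection guidelines and is only a screening heuristic, not a complete fairness analysis. The group labels and decisions are made up for illustration.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns group -> approval rate."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Illustrative decisions: group "X" approved 60 of 100, group "Y" approved 42 of 100.
decisions = ([("X", True)] * 60 + [("X", False)] * 40
             + [("Y", True)] * 42 + [("Y", False)] * 58)
rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
if ratio < 0.8:  # four-fifths screening heuristic
    print("Review: outcome rates differ substantially across groups")
```

A ratio below the cutoff does not prove the model is unfair, and a ratio above it does not prove it is fair; it tells you where to look more closely with your actual customer population.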

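Model versioning and rollback capabilities enable safe experimentation and quick recovery when improvements don't work as expected. You deploy an updated recommendation engine that performs better in testing but reduces customer engagement in production. With versioning and rollback, you can restore the previous model while you investigate, instead of rebuilding it under pressure.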
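A minimal in-memory sketch of versioning with rollback: every deployed model is kept under a version number, and rolling back re-points the live version to the previous one without deleting anything. `ModelRegistry` is a hypothetical class for illustration; in practice this role is usually filled by a model registry service working alongside your deployment tooling.

```python
class ModelRegistry:
    """Keeps every deployed model version so the live one can be swapped back."""

    def __init__(self):
        self._versions = {}   # version number -> model (any callable)
        self._history = []    # versions in the order they went live

    def deploy(self, model) -> int:
        """Register a new model version and make it live."""
        version = len(self._versions) + 1
        self._versions[version] = model
        self._history.append(version)
        return version

    @property
    def live_version(self) -> int:
        return self._history[-1]

    def predict(self, *args):
        return self._versions[self.live_version](*args)

    def rollback(self) -> int:
        """Return to the previously live version; old versions are never deleted."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self.live_version


# Illustrative "models": functions that return recommendations for a user.
registry = ModelRegistry()
registry.deploy(lambda user: ["bestsellers"])
registry.deploy(lambda user: ["experimental-picks"])  # v2 looked better in testing
print(registry.live_version, registry.predict("u1"))
registry.rollback()                                   # engagement dropped in production
print(registry.live_version, registry.predict("u1"))
```

Because rollback only changes which version is live, recovery is fast and the rejected version remains available for analysis.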

Explanation capabilities that stakeholders can understand provide transparency for business decisions, regulatory compliance, and user trust. Your loan approval AI needs to provide explanations that loan officers can discuss with customers, not technical output that only data scientists understand.

Continuous learning and adaptation keep models relevant as business conditions change. Customer behavior shifts, market conditions evolve, and operational patterns adapt to new circumstances in ways that can gradually reduce AI effectiveness.

Model management transforms AI from experimental technology into a reliable business capability that improves over time rather than degrading as conditions change.

Element 7: Performance and Resource Optimization – Sustaining AI Under Business Pressure

Performance optimization ensures that AI systems maintain consistent response times and reasonable costs as usage grows from pilot projects to enterprise-wide deployment.

Load testing with realistic scenarios reveals performance bottlenecks before they affect users. Your customer service chatbot works perfectly when three people test it, but response times crawl when fifty customers use it simultaneously during a service disruption.
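Real load tests point a traffic generator at a staging environment, but the underlying effect is easy to reproduce in a toy simulation. The sketch below assumes a hypothetical backend that can serve only 4 requests at once, each taking 50 ms, and fires 3 and then 50 simultaneous requests using `asyncio`; the numbers are illustrative, not a benchmark of any real service.

```python
import asyncio
import time

BACKEND_CONCURRENCY = 4   # hypothetical: requests the backend can process at once
SERVICE_TIME_S = 0.05     # hypothetical: time to answer one request


async def handle_request(slots: asyncio.Semaphore) -> float:
    """Simulate one chatbot request; return its end-to-end latency in seconds."""
    start = time.perf_counter()
    async with slots:                        # wait for a free backend slot
        await asyncio.sleep(SERVICE_TIME_S)  # stand-in for model inference
    return time.perf_counter() - start


async def run_load(concurrent_users: int) -> float:
    """Fire all requests at once and return the worst observed latency."""
    slots = asyncio.Semaphore(BACKEND_CONCURRENCY)
    latencies = await asyncio.gather(
        *(handle_request(slots) for _ in range(concurrent_users))
    )
    return max(latencies)


for users in (3, 50):
    worst = asyncio.run(run_load(users))
    print(f"{users:>2} users -> worst latency {worst * 1000:.0f} ms")
```

With three users every request gets a slot immediately; with fifty, requests queue behind the 4-slot limit, so the slowest one waits roughly 13 rounds of service time. The same queuing happens in production when a dependency such as a model endpoint has a concurrency or rate limit.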

Cost optimization for variable usage prevents budget surprises as AI adoption grows. API-based AI services are typically priced by usage, so costs scale with request volume, token consumption, and model choice; decisions that seemed reasonable during testing can become expensive at production volume.
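Token-based pricing makes cost the product of volume, tokens per request, and per-token price, which is why a change in any one factor compounds at scale. The prices, volumes, and token counts below are placeholders, not any vendor's actual rates; substitute your provider's published pricing.

```python
from dataclasses import dataclass


@dataclass
class ModelPrice:
    # Placeholder prices in USD per 1,000 tokens; not real vendor rates.
    input_per_1k: float
    output_per_1k: float


def monthly_cost(requests_per_day: int, input_tokens: int, output_tokens: int,
                 price: ModelPrice, days: int = 30) -> float:
    """Estimated monthly spend for one use case on one model."""
    per_request = (input_tokens / 1000 * price.input_per_1k
                   + output_tokens / 1000 * price.output_per_1k)
    return per_request * requests_per_day * days


small = ModelPrice(input_per_1k=0.0005, output_per_1k=0.0015)
large = ModelPrice(input_per_1k=0.01, output_per_1k=0.03)

# Pilot: 200 requests/day. Production: 20,000 requests/day with longer prompts,
# e.g. because more context is added to each request.
for label, rpd, tokens_in in (("pilot", 200, 1_000), ("production", 20_000, 4_000)):
    for name, price in (("small model", small), ("large model", large)):
        cost = monthly_cost(rpd, tokens_in, 500, price)
        print(f"{label:<10} {name:<11} ${cost:,.2f}/month")
```

Under these placeholder numbers, monthly spend grows 220-fold from pilot to production for either model (a 100-fold increase in traffic multiplied by a 2.2-fold increase in cost per request from longer prompts), and the larger model costs 20 times more at every scale.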

Resource scaling that matches business patterns handles the reality that AI usage rarely grows smoothly. Customer service AI sees spikes during product launches, financial analysis AI gets heavy usage during reporting periods, and operational AI faces variable loads that correspond to business cycles.
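A back-of-the-envelope way to size capacity for peaks uses Little's law: the number of requests in flight is roughly the arrival rate multiplied by the average latency. The sketch below assumes a hypothetical customer service AI with 2-second average latency, replicas that each handle 8 concurrent requests, and a 25% headroom factor; all three are illustrative values to replace with your own measurements.

```python
import math


def replicas_needed(peak_rps: float, avg_latency_s: float,
                    concurrency_per_replica: int, headroom: float = 1.25) -> int:
    """Estimate replicas for a load level using Little's law.

    In-flight requests ~= arrival rate x average latency. headroom adds a
    safety margin (hypothetical 25% default) for bursts and uneven routing.
    """
    in_flight = peak_rps * avg_latency_s * headroom
    return max(1, math.ceil(in_flight / concurrency_per_replica))


# Hypothetical load levels for the same service on different days.
for period, rps in (("normal day", 5), ("product launch", 60)):
    print(f"{period:<15} {replicas_needed(rps, 2.0, 8)} replicas")
```

The twelve-fold jump in traffic during a launch turns 2 replicas into 19, which is why scaling policies tied to business calendars or live request rates matter more than a fixed capacity chosen during the pilot.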

Quality-performance trade-offs enable appropriate optimization for different use cases. Executive dashboards might prioritize accuracy over speed, while customer-facing applications need to balance quality with response time expectations.
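One way to make this trade-off explicit is to route each use case to the highest-quality model that fits its latency budget. The model names, latencies, quality scores, and budgets below are hypothetical placeholders, not measurements of real models.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    p95_latency_ms: int    # hypothetical measured 95th-percentile latency
    quality_score: float   # hypothetical offline evaluation score (higher is better)


OPTIONS = [
    ModelOption("fast-small", p95_latency_ms=300, quality_score=0.78),
    ModelOption("balanced", p95_latency_ms=1200, quality_score=0.86),
    ModelOption("slow-large", p95_latency_ms=6000, quality_score=0.93),
]

# Hypothetical latency budgets per use case.
LATENCY_BUDGET_MS = {"customer_chat": 1500, "executive_dashboard": 10_000}


def choose_model(use_case: str) -> ModelOption:
    """Pick the highest-quality model whose p95 latency fits the use case's budget."""
    budget = LATENCY_BUDGET_MS[use_case]
    eligible = [m for m in OPTIONS if m.p95_latency_ms <= budget]
    if not eligible:
        # Nothing fits the budget: fall back to the fastest option rather than fail.
        return min(OPTIONS, key=lambda m: m.p95_latency_ms)
    return max(eligible, key=lambda m: m.quality_score)


for use_case in LATENCY_BUDGET_MS:
    print(use_case, "->", choose_model(use_case).name)
```

Keeping budgets and scores in configuration rather than code lets each use case be retuned as models, prices, or user expectations change.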

Geographic distribution becomes necessary when AI serves global users or needs to comply with data residency requirements. Performance optimization strategies that work in one region might face latency or regulatory challenges when expanded internationally.

The goal isn't maximum performance; it's sustainable performance that supports business objectives while managing costs as AI usage scales with business success.

How These Elements Work Together

Data pipeline integrity, model management, and performance optimization form an integrated system where each element enables and reinforces the others.

Strong data pipelines enable sophisticated model management because you can trust that models receive consistent, high-quality information that produces reliable performance metrics and meaningful business insights.

Effective model management supports performance optimization because you understand how your AI systems behave under different conditions and can optimize resource allocation based on actual usage patterns rather than theoretical requirements.

Performance optimization protects data pipeline integrity because systems designed for scale can handle data processing requirements that grow with business adoption without compromising quality or creating bottlenecks.

Organizations that treat these elements as separate concerns often discover integration problems during deployment. Data pipelines optimized for batch processing struggle to support real-time AI applications. Model management systems designed for development don't scale to production monitoring requirements. Performance optimization strategies that focus on technical metrics miss business cost considerations.

Questions That Reveal Data and AI Readiness

When evaluating AI initiatives, these questions reveal whether the data and AI core elements are properly addressed:

Data Pipeline Integrity: How does the system handle missing, inconsistent, or low-quality data? What happens when data sources change formats or go offline? How do you monitor data quality in real-time and ensure AI systems receive consistent information?

Model Management: How do you detect when AI performance degrades in ways that affect business outcomes? What's your process for updating models while maintaining service continuity? How do you explain AI decisions to stakeholders who need to understand and trust the results?

Performance Optimization: How does system performance change as usage grows? What are your strategies for managing costs as AI adoption scales? How do you balance AI quality with response time requirements for different use cases?

Detailed answers that address real-world complexity suggest mature approaches to data and AI management. Vague responses, or answers that only describe best-case scenarios, indicate gaps that will create problems when AI systems encounter the messiness of actual business operations.

The Investment That Enables AI Success

Building robust data and AI core capabilities requires more upfront investment than rushing directly from demo to deployment. But this investment creates sustainable competitive advantages:

Reliable AI performance despite data quality variations that would break less robust systems, enabling confident deployment in business-critical applications.

Faster innovation cycles because data infrastructure and model management capabilities support rapid experimentation and deployment of new AI applications.

Predictable costs that scale reasonably with business value rather than creating budget surprises that threaten AI program sustainability.

Organizational confidence in AI capabilities that enables ambitious use cases and enterprise-wide deployment rather than limiting AI to isolated pilot projects.

Most importantly, strong data and AI core capabilities enable your organization to capture business value from AI consistently rather than experiencing the boom-and-bust cycle of impressive demonstrations followed by disappointing production performance.

Beyond the Demo: Building AI That Works with Your Data

The AI transformation is real, but capturing its benefits requires systems designed for the complexity of real business data rather than the simplicity of demonstration datasets.

Organizations that invest in data pipeline integrity, comprehensive model management, and sustainable performance optimization position themselves to deploy AI confidently across their enterprise. Those that assume clean demo performance will translate directly to messy production environments often discover expensive gaps that require complete system redesigns.

Your data isn't perfect, and it never will be. But AI systems built with proper data and AI core foundations can deliver reliable business value despite the inevitable imperfections in real enterprise information.

The question isn't whether your data is clean enough for AI. It's whether your AI approach is robust enough for your data.